This article opens a series from which you can learn:

- what artificial intelligence is

- the types of artificial intelligence

- the history behind AI

- the Turing test and its limitations.

I tried to summarize my knowledge in this area of computer science in a manner that would be easily accessible to technical engineers or even professors who want to expand their knowledge.

I consider this guide a snapshot of the state of the art of our knowledge on Artificial Intelligence at the beginning of the second decade of this century, and I am personally looking forward to reviewing it ten years from now to better observe how the field has evolved.

So what is artificial intelligence?

Artificial intelligence, or AI, is a domain of computer science aimed at developing computers capable of doing things that are usually done by humans, in particular things associated with humans acting intelligently. It is the science of finding theories and methodologies for making machines intelligent through the software that controls them, so that they can perform tasks humans carry out in their daily lives. Primarily, AI is the simulation of human intelligence processes by machines, especially computers.

These processes include learning, reasoning, and self-correction. Learning deals with acquiring information and the rules for using it, while reasoning deals with using those rules to reach approximate or definite conclusions.

With artificial intelligence, a machine can have human-like skills such as learning, reasoning, and solving problems. The user does not need to reprogram the machine for each task; instead, they can create a machine with programmed algorithms that works with its own intelligence.

This is exactly what AI is all about.

AI is not an entirely new idea. According to ancient myths, there were mechanical men in early times that could work and behave like humans.

For further details, see the article on 7 early robots and automatons[1].

To create a machine with artificial intelligence, we must first know what intelligence is composed of. Intelligence is an intangible faculty of our brain that combines:

- reasoning,

- learning,

- problem solving,

- perception,

- language,

- understanding, etc.

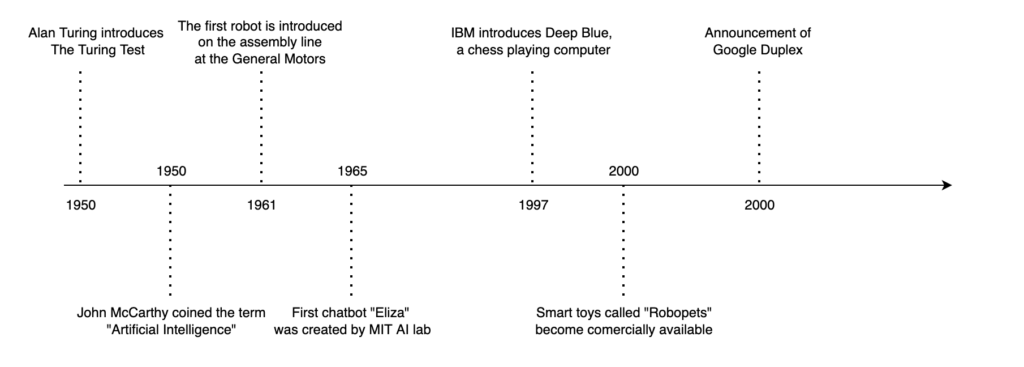

History of AI

The field of AI was born at a workshop at Dartmouth College in 1956. Herbert Simon (CMU), Allen Newell (CMU), Marvin Minsky (MIT), John McCarthy (MIT), and Arthur Samuel (IBM) became the leaders and founders of AI research. They and their students produced programs that the press described as astonishing, including computers that were learning checkers strategies.

And by 1959, these machines were reportedly playing better than the average human.

Since 1956, AI has experienced several waves of optimism, followed by disappointment and loss of funding, followed in turn by success, new approaches, and renewed funding. For most of its history, AI research has been divided into sub-fields that often fail to communicate with each other. These sub-fields are based on technical considerations, such as particular goals, the use of distinct tools, or profound philosophical differences. They have also been based on social factors. Some of the goals of artificial intelligence include replicating human intelligence, solving knowledge-intensive tasks, and building machines that can perform tasks requiring human intelligence, such as proving a theorem, planning a surgical operation, playing chess, or driving a car in traffic.

While AI tools offer a range of new functionality for businesses, the use and implementation of artificial intelligence raise ethical questions, because the deep learning algorithms that underpin many advanced AI tools are only as smart as the data they are given in training. As a human selects the data used for training an AI program, the potential for human bias is inherent and must be monitored closely. Some experts believe that the term AI is too closely linked to popular culture, creating unrealistic fears among the general public about AI, and improbable expectations about how it will change the workplace and life in general. Marketers and researchers hope that the label augmented intelligence, which has a more neutral connotation, will help the general public understand that AI will simply improve products and services and not replace the humans who use them.

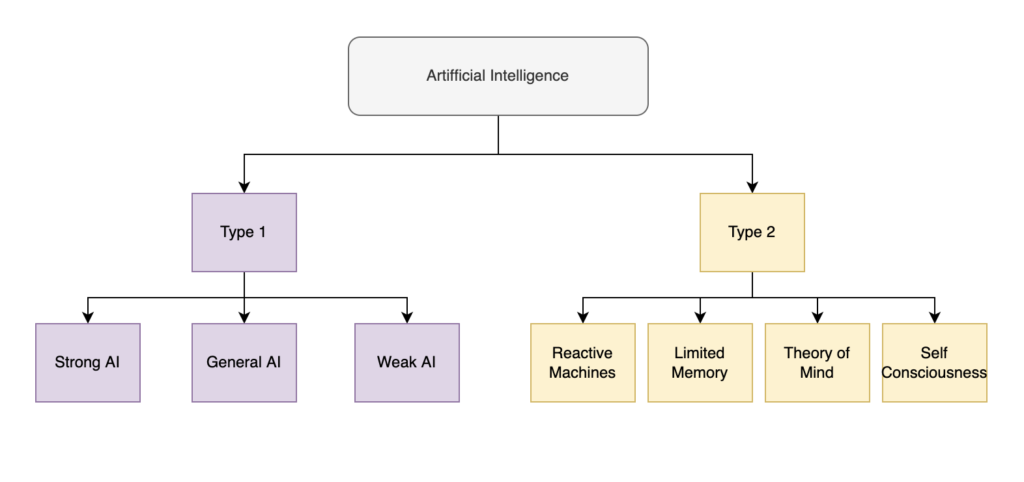

Nowadays, AI is categorized as Type 1 and Type 2[5].

Type 1 AI is based on capabilities and is further classified as strong AI, general AI, and weak AI.

Type 2 AI is based on functionality and is classified as reactive machines, limited memory, theory of mind, and self-awareness.

Artificial Intelligence is incorporated into different types of technologies, like:

- automation,

- machine learning,

- robotics,

- NLP,

- machine vision,

- self-driving cars, etc.

Also, AI has made its way into a number of areas like healthcare, business, education, finance, law, and manufacturing.

In healthcare, machine learning is used to make diagnoses better and faster than humans can. One of the best-known examples is IBM Watson, which understands natural language and is capable of responding to questions asked of it. The system mines patient data and other available data sources to form a hypothesis, which it then presents with a confidence scoring schema[6].

In business, robotic process automation is being applied to highly repetitive tasks that were previously performed by human beings.

Machine learning algorithms are integrated into analytics platforms to uncover information on how to better serve customers. Companies use chatbots to provide immediate service to customers. In education, AI can help by automating grading, assessing students and adapting to their needs so that they can work at their own pace, and providing tutors that offer additional support to students.

The period from 1943 to 1952 is known as the maturation period in the history of AI.

Note that during this period AI concepts were evolving separately from computer technologies[7].

In the year 1943, a model of artificial neurons was proposed by Warren McCulloch and Walter Pitts.

In the year 1949, Donald Hebb proposed an updating rule for modifying the connection strength between neurons, now known as Hebbian learning[8].
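To make the idea of an updating rule concrete, here is a minimal sketch. It assumes the simplest textbook form of the Hebbian rule, in which each weight grows by the product of its input, the neuron's output, and a small learning rate. The function name `hebbian_update` and the parameter `learning_rate` are illustrative choices, not something taken from Hebb's work or from the cited article.

```python
def hebbian_update(weights, inputs, output, learning_rate=0.1):
    """Return new weights after one Hebbian step: w_i += learning_rate * x_i * y."""
    return [w + learning_rate * x * output for w, x in zip(weights, inputs)]


weights = [0.0, 0.0, 0.0]
# Inputs 0 and 1 are active together with the output; input 2 stays silent.
pattern = [1.0, 1.0, 0.0]
for _ in range(5):
    weights = hebbian_update(weights, pattern, output=1.0)

# Connections from the co-active inputs have strengthened; the silent one has not.
print(weights)
```

Only the connections whose inputs fire together with the output are reinforced, which is the intuition usually summarized as "neurons that fire together wire together".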

In 1950, Alan Turing, an English mathematician and a pioneer of machine learning, published Computing Machinery and Intelligence[9], in which he proposed what is now known as the Turing test[10]. The test checks a machine’s ability to exhibit intelligent behavior equivalent to human intelligence.

The period from 1952 to 1956 is known as the birth period of AI.

In the year 1955, Allen Newell and Herbert A. Simon created the first artificial intelligence program, named Logic Theorist[11]. This program proved 38 of 52 mathematical theorems and found new and more elegant proofs for some of them.

In the year 1956, the term Artificial Intelligence was first adopted by American computer scientist John McCarthy at the Dartmouth conference, and AI was established as an academic field. Around that time, high-level computer languages such as Fortran, COBOL, and Lisp were invented. Then came the golden years[12], from 1956 to 1974.

In 1966, researchers emphasized developing algorithms that could solve mathematical problems. In the same year, Joseph Weizenbaum created the first chatbot, named ELIZA[13].

In the year 1972, the first intelligent humanoid robot, named WABOT-1[14], was built in Japan.

The years from 1974 to 1980 were the first AI winter. An AI winter is a period in which computer scientists face a severe shortage of government funding for AI research. During this period, public interest in artificial intelligence decreased drastically.

In 1980, AI came back with expert systems, programs designed to emulate the decision-making ability of a human expert. In the same year, the first national conference of the American Association for Artificial Intelligence was held at Stanford University.

AI experienced another major winter from 1987 to 1993. Coinciding with the collapse of the market for some general-purpose computers and with cuts in government funding, investors and governments once again stopped funding AI research because of its high costs and disappointing results.

In the year 1997, IBM’s Deep Blue[14] beat the world chess champion Garry Kasparov and became the first computer to beat a reigning world chess champion.

In the year 2002, for the first time, AI entered homes in the form of Roomba, a vacuum cleaner.

By 2006, AI had become part of the business world. Companies like Facebook, Twitter, and Netflix started using AI.

In the year 2011, IBM’s Watson won the quiz show Jeopardy![15] by beating champions Brad Rutter and Ken Jennings in a contest where it had to solve complex questions and puzzles. Watson proved that it could understand natural language and solve tricky questions quickly.

Later, in the year 2012, Google launched an Android app named Google Now, which was able to provide information to the user in the form of a prediction.

In the year 2014, a chatbot named Eugene Goostman[16] won a competition based on the famous Turing test.

In the year 2018, IBM’s Project Debater debated complex topics with two master debaters and performed extremely well. Google also demonstrated an AI program named Duplex, a virtual assistant that booked a hairdresser appointment over the phone; the person on the other end of the call did not appear to realize that they were conversing with a machine.

At present, AI has developed to a remarkable level. Big data and deep learning are booming in data science. Today, companies like Google, Facebook, IBM, and Amazon are working with AI and creating amazing devices.

Types of AI

As described previously, AI is categorized as type 1 and type 2.

Type 1 AI

It is based on capabilities and is further classified as:

- strong AI,

- general AI and

- weak AI

Strong Artificial Intelligence, or true intelligence, is a general form of machine intelligence that is equal to human intelligence. The key characteristics of strong AI include the ability to solve puzzles, reason, make judgments, learn, plan, and communicate. It would also have consciousness, self-awareness, objective thoughts, and sentience. The goal of strong AI is to develop AI to the point where the machine’s intellectual capability is functionally equal to a human’s. Strong AI does not currently exist. Some experts predict that it may be developed by 2030, others that it may be developed within the next century, and others that the development of strong AI may not be possible at all.

Some experts argue that a machine with strong AI should be able to go through the same development process as a human being, starting with a childlike mind and developing the mind of an adult through learning.

These machines should be able to interact with the world and learn from it, acquiring their own common sense and knowledge.

General AI allows the machine to apply knowledge and skills in different contexts. It closely mirrors human intelligence by providing opportunities for problem-solving. The idea behind general AI is that the system would possess the cognitive abilities and general experiential understanding of its environment that humans possess, together with the ability to process data at much greater speeds. This implies that the system would become far more capable than humans in the areas of cognitive ability, knowledge, and processing speed.

Weak AI, or narrow AI, is an approach to artificial intelligence research and development based on the view that AI is always a simulation of human cognitive function, and that computers can only appear to think but are not actually conscious in any sense of the word. Weak AI simply acts within the rules imposed on it and cannot go beyond those rules. It is specifically designed to focus on a narrow task at which it seems to be intelligent. Weak AI never exhibits general intelligence; instead, it simulates human cognition within the narrow task it is assigned, and benefits mankind by automating time-consuming tasks and by analyzing data in ways that humans sometimes cannot.

The classic illustration of this view is John Searle’s Chinese Room thought experiment. In this experiment, a person outside a room is able to hold what appears to be a conversation in Chinese with a person inside the room, who is given instructions on how to respond to messages in Chinese. The person inside the room would seem to speak Chinese, but in reality, they could not speak or understand a word of it without the instructions they are provided. That is because the person is good at following instructions, not at speaking Chinese.

Weak AI systems have specific intelligence, not general intelligence. Weak AI helps in turning big data into usable information by detecting patterns and making predictions. Email spam filters are a common example of a weak AI system, in which a computer uses an algorithm to learn which messages are spam and then redirects them from the inbox to the spam folder.
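As a rough illustration of the spam filter example, the sketch below learns word counts from a handful of labeled messages and then routes new messages to an inbox or a spam folder. It is a deliberately naive toy under stated assumptions: the function names `train` and `looks_like_spam`, the scoring rule, and the sample messages are all hypothetical, and real filters rely on far more robust statistical methods.

```python
from collections import Counter


def train(messages):
    """Count how often each word appears in spam and in non-spam messages."""
    spam_words, ham_words = Counter(), Counter()
    for text, is_spam in messages:
        target = spam_words if is_spam else ham_words
        target.update(text.lower().split())
    return spam_words, ham_words


def looks_like_spam(text, spam_words, ham_words):
    """Flag a message when its words were seen more often in spam than in non-spam."""
    words = text.lower().split()
    spam_score = sum(spam_words[w] for w in words)
    ham_score = sum(ham_words[w] for w in words)
    return spam_score > ham_score


# Hypothetical labeled examples: (message text, is it spam?)
training_data = [
    ("win a free prize now", True),
    ("free money click now", True),
    ("meeting moved to monday", False),
    ("lunch on monday", False),
]
spam_words, ham_words = train(training_data)

inbox, spam_folder = [], []
for message in ["claim your free prize", "see you at the meeting"]:
    folder = spam_folder if looks_like_spam(message, spam_words, ham_words) else inbox
    folder.append(message)
```

The program is useful for exactly one narrow task and has no understanding of what a message means, which is what makes it weak AI.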

Type 2 AI

It is based on functionalities, and it’s classified as:

- reactive machines,

- limited memory,

- theory of mind and

- self-awareness.

Reactive machines. The most basic kind of AI systems are purely reactive: they can neither form memories nor use past experience to inform current decisions. This kind of intelligence involves a computer perceiving the world directly and acting on what it observes. It does not rely on any internal concept of the world. These machines are the oldest form of AI systems and have extremely limited capability. They imitate the human mind’s ability to react to different kinds of stimuli. Because they have no memory-based functionality, they cannot use previously gained experience to inform their present actions. That is, these machines do not have the ability to learn. They can only be used to respond automatically to a limited set of input combinations, and they cannot rely on memory to improve their operations.

A classic example of a reactive machine is Deep Blue, IBM’s chess-playing supercomputer that defeated grandmaster Garry Kasparov in the late 1990s. Deep Blue identifies the pieces on a chessboard and knows how each one moves. It makes predictions about what moves might come next for itself and its opponent, and chooses the best move from among the possibilities. It has no concept of the past and no memory of what has happened before. Deep Blue ignores everything before the present moment: all it does is look at the pieces on the chessboard as it stands and choose from the possible next moves.
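The defining property of a reactive machine, choosing an action from the current position alone, can be sketched with a much simpler game than chess. The example below is not Deep Blue's algorithm; it assumes a toy game in which two players alternately take 1, 2, or 3 stones and whoever takes the last stone wins. The names `legal_moves`, `evaluate`, and `choose_move` are hypothetical.

```python
def legal_moves(stones):
    """In this toy game a player may take 1, 2, or 3 stones."""
    return [n for n in (1, 2, 3) if n <= stones]


def evaluate(stones_left):
    """Score a position for the player who just moved.

    Leaving the opponent a multiple of 4 stones is a winning position.
    """
    return 1 if stones_left % 4 == 0 else 0


def choose_move(stones):
    """Pick the best-scoring move using only the current position."""
    return max(legal_moves(stones), key=lambda take: evaluate(stones - take))


# The function keeps no state between calls, so the same position
# always produces the same move, regardless of how the game got there.
first = choose_move(10)
second = choose_move(10)
```

Nothing is stored between calls to `choose_move`, so the program cannot improve with experience; it only reacts to the position it is shown.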

Limited memory machines have the capabilities of purely reactive machines and are also capable of learning from historical data to make decisions. Nearly all existing applications come under this category of AI. Present-day AI systems, such as those using deep learning, are trained on large volumes of training data, which they use to form a reference model for solving future problems. For instance, an image recognition AI is trained using thousands of pictures and their labels. When such an AI scans a new image, it uses the training images as references to understand the contents of that image and, based on this learning experience, labels new images with increasing accuracy.
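The idea of using labeled training examples as references can be sketched with a nearest-neighbor lookup. This is a swapped-in, simplified technique chosen because it mirrors the description above literally; deep learning systems instead compress their training data into model weights. The two-number "images", the labels, and the function name `nearest_label` are hypothetical.

```python
import math


def nearest_label(training_set, features):
    """Return the label of the stored example closest to `features`."""
    closest = min(training_set, key=lambda example: math.dist(example[0], features))
    return closest[1]


# Hypothetical two-number "images": (average brightness, fraction of edge pixels).
training_set = [
    ((0.9, 0.1), "sky"),
    ((0.8, 0.2), "sky"),
    ((0.2, 0.7), "forest"),
    ((0.3, 0.8), "forest"),
]

# A new, unlabeled image is compared against the stored references.
label = nearest_label(training_set, (0.25, 0.75))
```

Adding more labeled examples to `training_set` changes future answers, which is the sense in which such a system draws on past data, unlike a purely reactive machine.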

AI applications like chatbots, virtual assistants, and self-driving vehicles are all driven by the limited memory concept. Self-driving cars, for example, observe other cars’ speed and direction. These observations are added to the self-driving car’s pre-programmed representation of the world, which includes traffic lights, lane markings, and other important elements like curves or potholes in the road.

Theory of mind. This type of AI exists only as a concept or a work in progress. It is the next level of AI systems that researchers are currently working toward. Such a system will be able to better understand the entities it interacts with by discerning their needs, beliefs, emotions, and thought processes. Achieving theory of mind requires development in other branches of AI as well, because to truly understand human needs, these machines will have to perceive humans as individuals whose minds are shaped by multiple factors.

Self-awareness is the final stage of AI development, and it currently exists only hypothetically. Self-aware AI is an AI that has evolved to be so akin to the human brain that it has developed self-awareness. It will not only be able to understand and evoke emotions, but also have emotions, needs, beliefs, and potentially desires of its own. Although the development of this AI could boost progress by leaps and bounds, it could also lead to catastrophe. Once an AI becomes self-aware, it would be capable of having ideas like self-preservation that may directly or indirectly spell the end of humanity.

The Turing Test

In 1950, Alan Turing introduced a test to check whether a machine can think like a human or not, which is known as the Turing test.

In this test, Alan Turing proposed that a computer can be said to be intelligent if it can mimic human responses under specific conditions. The Turing test is based on the imitation game, with some alterations. It involves three participants: one player is a computer, another is a human responder, and the third is a human interrogator, who is isolated from the other two players and whose job is to find out which of the two is the machine. The interrogator is aware that one of them is a machine, but must identify it on the basis of the questions asked and the responses received from the players. All conversation between the players takes place through a keyboard and a screen, so the result does not depend on the machine’s ability to render words as speech. The outcome does not depend on giving correct answers, but only on how closely the machine’s answers resemble those a human would give. The computer is permitted to do everything possible to force a wrong identification by the interrogator. If the interrogator cannot tell which player is the machine and which is the human, the computer passes the test, and it is then said to be intelligent and able to think like a human.
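The structure of the test described above can be sketched as a small simulation. Everything in it is an illustrative assumption: the three participants are stand-in functions, the players are hidden behind the labels "A" and "B", and the interrogator uses a crude rule of thumb rather than real judgment.

```python
import random


def run_imitation_game(questions, human, machine, interrogator, rng):
    """Play one round; return True if the interrogator fails to spot the machine."""
    # Hide the players behind neutral labels so only their text is visible.
    players = [human, machine]
    rng.shuffle(players)
    labelled = dict(zip("AB", players))

    # Every exchange is text only, as with the keyboard and screen in the test.
    transcript = {
        label: [(q, player(q)) for q in questions]
        for label, player in labelled.items()
    }
    guess = interrogator(transcript)  # label the interrogator believes is the machine
    machine_label = next(l for l, p in labelled.items() if p is machine)
    return guess != machine_label


# Hypothetical stand-ins for the three participants.
def human(question):
    return "Hmm, let me think about that."


def machine(question):
    return "QUERY NOT UNDERSTOOD"


def interrogator(transcript):
    # Accuse whichever player answered everything in stiff capital letters.
    for label, exchanges in transcript.items():
        if all(answer.isupper() for _, answer in exchanges):
            return label
    return "A"


passed = run_imitation_game(
    ["What is your favourite season?"], human, machine, interrogator, random.Random(0)
)
```

Here the machine's robotic reply gives it away, so `passed` is false; a machine would pass a round only when the interrogator's guess lands on the human.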

In 1991, the New York-based businessman Hugh Loebner announced prize money of $100,000 for the first computer that passes the Turing test. But to date, no AI program has come close to passing the undiluted Turing test.

In order to prepare this article, I studied the lectures from GlobalTechCouncil. They served me as a great source of inspiration and guidance through the process of discovering and understanding AI technologies.

Below, you can also find some valuable materials on this topic.

References

[1] https://www.history.com/news/7-early-robots-and-automatons

[2] https://www.nature.com/articles/d41586-018-05773-y

[3] https://www.theatlantic.com/technology/archive/

[4] https://digitalreality.ieee.org/publications/what-is-augmented-intelligence

[5] https://www.javatpoint.com/types-of-artificial-intelligence

[6] https://www.ibm.com/watson-health

[7] https://www.computerhope.com/issues/ch000984.htm

[8] https://medium.datadriveninvestor.com/what-is-hebbian-learning-3a027e8e4bbb

[9] https://www.csee.umbc.edu/courses/471/papers/turing.pdf

[10] https://en.wikipedia.org/wiki/Turing_test

[11] https://journals.sagepub.com/doi/abs/10.1177

[12] https://www.wired.com/2006/07/ai-reaches-the-golden-years/

[13] https://analyticsindiamag.com/story-eliza-first-chatbot-developed-1966/

[14] https://www.britannica.com/topic/Deep-Blue

[15] https://www.nytimes.com/2011/02/17/science/17jeopardy-watson.html

[16] https://www.youtube.com/watch?v=_5OfaGTwbiI
