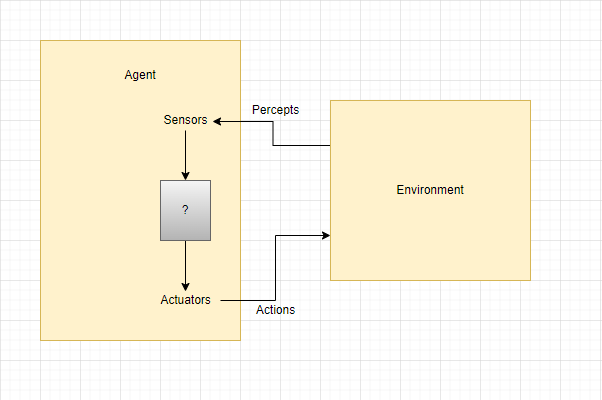

An intelligent agent (IA) is an entity that makes decisions and thereby puts artificial intelligence into action. It can also be seen as a software entity that carries out operations on behalf of users or programs after sensing the environment, and that uses actuators to act on that environment. [1]

In this article, we present IAs, their types and their functionalities.

AI is often defined as the study of rational agents and their environments. Agents sense the environment through sensors and act on it through actuators. These agents can have mental properties such as knowledge, belief and intention. [2]

An agent can be classified as:

- human agents

- robotic agents

- software agents

A human agent has sensory organs such as eyes, ears, nose, tongue and skin that act as sensors, and other organs such as hands, legs and mouth that act as effectors. [3]

A robotic agent has cameras and infrared rangefinders as sensors, and various motors and actuators as effectors. [3]

A software agent has programs as its percepts and actions. Its sensors are inputs such as keystrokes, file contents or network packets. Its actions are outputs such as displaying on the screen, writing to files or sending network packets.

A simple agent program can be described mathematically as a function f, known as the agent function, that maps every possible percept sequence [4] to a possible action the agent can perform, or to a coefficient that affects the eventual actions. [5]
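To make the idea of an agent function more concrete, here is a minimal sketch of a table-driven agent for a two-square vacuum world. The world, the percept format `(location, status)`, the action names and the contents of `TABLE` are all assumptions chosen for illustration; they do not come from the cited sources.

```python
from typing import Callable, Dict, List, Tuple

Percept = Tuple[str, str]  # assumed format: (location, status), e.g. ("A", "Dirty")
Action = str


def make_table_driven_agent(
    table: Dict[Tuple[Percept, ...], Action],
    default: Action = "NoOp",
) -> Callable[[Percept], Action]:
    """Build an agent whose behaviour is the function f: percept sequence -> action."""
    percepts: List[Percept] = []  # the percept sequence seen so far

    def agent(percept: Percept) -> Action:
        percepts.append(percept)
        # Look up the whole sequence; fall back to a default action if it is unknown.
        return table.get(tuple(percepts), default)

    return agent


# Hypothetical fragment of the table for a two-square vacuum world.
TABLE = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Dirty"), ("A", "Clean")): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
}

agent = make_table_driven_agent(TABLE)
print(agent(("A", "Dirty")))  # first percept
print(agent(("A", "Clean")))  # second percept, looked up together with the first
```

The table grows with every possible percept sequence, which is why practical agent programs compute the action instead of storing it.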

Understanding intelligent agents

An intelligent agent is an autonomous entity that perceives its environment through sensors and acts on it through actuators in order to achieve goals. These agents may also be able to learn from their environment in order to achieve those goals.

An intelligent agent follows four main rules. First, the agent must be able to perceive the surrounding environment. Second, its observations must be used to make decisions. Third, each decision should result in an action. Finally, the action taken by an AI agent must be a rational action.

In humans, sensors are things like eyes and ears, which we use to observe the world around us, and actuators are things like the voice, which we use to respond to something we have heard. In an intelligent agent, sensors are things like cameras and microphones, and actuators include speakers and voice files that perform tasks such as transmitting information or putting other devices into action. [6]

The main task of an Artificial Intelligence Developer is to design an agent program that implements the agent function.

The structure of an intelligent agent is a combination of its architecture and its agent program. The architecture is the machinery that the agent executes on, whereas the agent program is an implementation of the agent function. The agent program runs on the physical architecture to produce the agent function f, which maps a percept sequence to an action.

An example of an intelligent agent is a reflex machine (like a thermostat).
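The split between agent program and architecture can be sketched with the thermostat example. In this sketch, `thermostat_program` plays the role of the agent program and `run_architecture` stands in for the machinery that feeds it sensor readings; the target temperature, the tolerance and the action names are assumptions made for illustration.

```python
from typing import Callable, Iterable, List


def thermostat_program(temperature_c: float,
                       target_c: float = 21.0,
                       tolerance_c: float = 0.5) -> str:
    """Agent program: map the current temperature reading to an action."""
    if temperature_c < target_c - tolerance_c:
        return "heat_on"
    if temperature_c > target_c + tolerance_c:
        return "heat_off"
    return "no_change"


def run_architecture(readings: Iterable[float],
                     program: Callable[[float], str]) -> List[str]:
    """Stand-in for the architecture: pass each sensor reading to the program
    and collect the actions that would be sent to the actuator."""
    return [program(reading) for reading in readings]


print(run_architecture([18.0, 21.2, 23.0], thermostat_program))
```

The same program could run on a different architecture (real sensors, a simulator) without changing the mapping from readings to actions.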

Types of agents

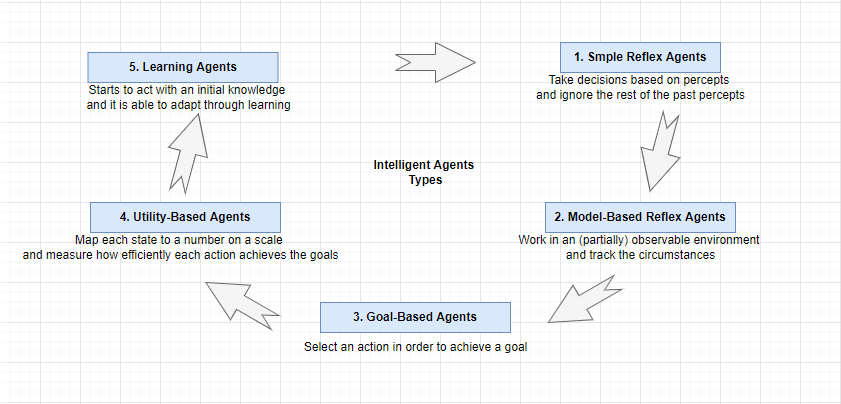

Agents can be classified into five categories, depending on their capabilities and degree of perceived intelligence: Simple Reflex Agents, Model-Based Reflex Agents, Goal-Based Agents, Utility-Based Agents and Learning Agents.

- Simple reflex agents – Take decisions based on the current percept and ignore the rest of the percept history

- Model-Based reflex agents – Work in a partially observable environment and keep track of the situation

- Goal-based agents – Select an action in order to achieve a goal

- Utility-based agents – Map each state to a number on a scale and measure how efficiently each action achieves the goals

- Learning agents – Start acting with some initial knowledge and adapt through learning

Simple reflex agents

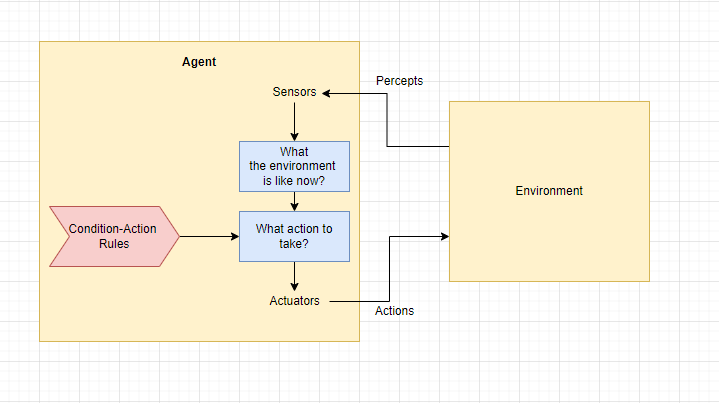

Simple reflex agents act only on the basis of the current percept and ignore the rest of the percept history. The percept history is everything the agent has perceived to date.

The simple reflex agent function is based on condition-action rules: if the condition is true, the action is executed; otherwise it is not.

A condition-action rule maps a state (the condition) to an action.

Simple reflex behaviours occur even in more complex environments, but the agent function only succeeds when the environment is fully observable. When simple reflex agents operate in partially observable environments, infinite loops are often unavoidable. It may be possible to escape from these loops if the agent can randomize its actions.
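The condition-action mechanism described above can be sketched as follows. The two-square vacuum world, the percept dictionary and the rule list `RULES` are assumptions made for illustration. The second function shows how an agent without a location sensor might randomize its movement to avoid getting stuck.

```python
import random

# Each rule is a (condition, action) pair; the first matching rule fires.
RULES = [
    (lambda p: p["status"] == "Dirty", "Suck"),
    (lambda p: p["location"] == "A", "Right"),
    (lambda p: p["location"] == "B", "Left"),
]


def simple_reflex_agent(percept, rules=RULES):
    """Choose an action from the current percept only; no history is kept."""
    for condition, action in rules:
        if condition(percept):
            return action
    return "NoOp"


def randomized_reflex_agent(status, rng=random):
    """Variant with no location sensor: it perceives only dirt, so it moves
    in a random direction when the current square is clean."""
    if status == "Dirty":
        return "Suck"
    return rng.choice(["Left", "Right"])


print(simple_reflex_agent({"location": "A", "status": "Dirty"}))
print(simple_reflex_agent({"location": "A", "status": "Clean"}))
```

A deterministic agent without a location sensor would always move the same way on a clean square and could bump into the same wall forever; the random choice is what lets it eventually reach the other square.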

Shortcomings of Simple Reflex Agents are:

- limited intelligence

- no knowledge about nonperceptual parts of the state

- the set of condition-action rules is usually too big to generate and store

- the collection of rules needs to be updated whenever the environment changes.

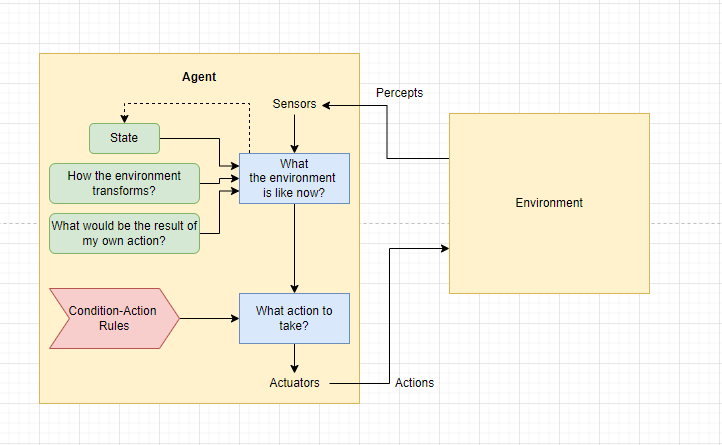

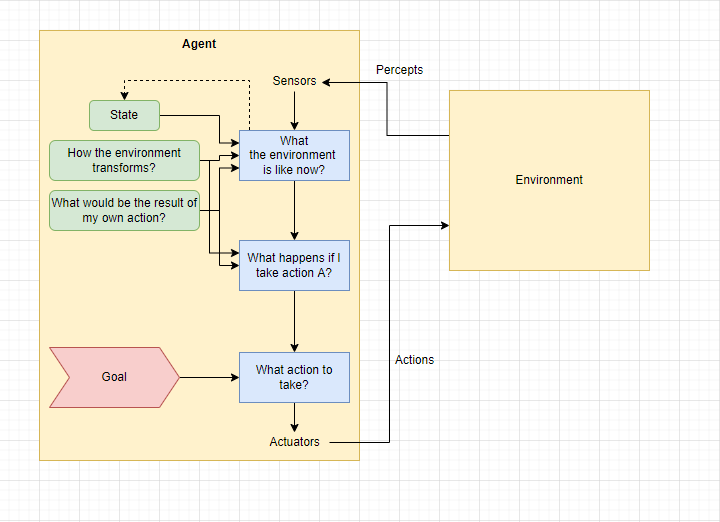

Model-Based reflex agents

The most effective way to handle partial observability is for the agent to keep track of the part of the world it cannot perceive now. That is, the agent should maintain some kind of internal state that depends on the percept history and reflects at least some of the unobserved aspects of the current state. The Model-Based Reflex Agent works by finding a rule whose condition matches the current situation.

These agents handle the partial-observability problem of simple reflex agents by using a model of the world. The agent keeps an internal state that is adjusted by each percept and generally depends on the percept history. This state is maintained inside the agent as some kind of structure describing the part of the world that cannot be observed. Updating the state requires information about how the world evolves independently of the agent and about how the agent’s actions affect the world. The structure of the Model-Based Reflex Agent shows how the current percept is combined with the old internal state to produce the updated description of the current state, based on the agent’s model of how the world works.

The model-based agent keeps track of the environment state, along with a set of goals it is seeking to achieve, and then chooses an action that will eventually lead to the achievement of its goals. [7]
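As a minimal sketch of the internal state described above, the agent below remembers the last known status of each square in a two-square vacuum world. The world, the class name `ModelBasedVacuumAgent` and its very small "model" (sucking makes a square clean, squares stay clean) are assumptions made for illustration.

```python
class ModelBasedVacuumAgent:
    """Reflex agent with an internal state for a two-square world (A and B)."""

    def __init__(self):
        # Internal state: last known status of each square (None = not yet seen).
        self.model = {"A": None, "B": None}

    def update_state(self, percept):
        """Combine the current percept with the old internal state."""
        location, status = percept
        self.model[location] = status

    def __call__(self, percept):
        self.update_state(percept)
        location, status = percept
        if status == "Dirty":
            # Assumed effect of the action: sucking leaves the square clean.
            self.model[location] = "Clean"
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"  # everything is known to be clean, so stop moving
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
    print(percept, "->", agent(percept))
```

A simple reflex agent would keep moving between the two clean squares; the remembered state is what allows this agent to stop.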

Goal-based agents

Knowing the current state of the environment is not always sufficient to decide what to do. In addition to a description of the current state, the agent needs some sort of goal information that describes the situations that are desirable. The agent program can combine this with the model to choose actions that achieve the goal. [8]

The goal-based agent takes decisions based on its goals; every action is intended to reduce its distance from the goal. This gives the agent a way to choose among multiple possibilities, selecting the one that reaches the goal state. The knowledge that supports the agent’s decisions is represented explicitly and can be modified easily, which makes goal-based agents more flexible. Sometimes goal-based action selection is straightforward, for example when the goal is achieved immediately by a single action. [9]

Sometimes it is trickier, for example when the agent has to consider a long sequence of twists and turns in order to find a way to achieve the goal. Search and planning are the subfields of AI devoted to finding action sequences that achieve the agent’s goals. Although the goal-based agent appears less efficient, it is more flexible, because the knowledge that supports its decisions is represented explicitly and can be modified. [10] The agent’s behaviour can easily be changed to go to a different destination simply by specifying that destination as the goal. [11]
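The point about changing the destination by changing the goal can be sketched with a small search. The example uses breadth-first search, which is only one of many possible search methods, over a hypothetical road map `ROADS`; the place names and the function `plan_route` are assumptions made for illustration.

```python
from collections import deque

# Hypothetical road map: each place lists the places reachable in one step.
ROADS = {
    "Home": ["Market", "Park"],
    "Market": ["Home", "Station"],
    "Park": ["Home", "Station"],
    "Station": ["Market", "Park", "Airport"],
    "Airport": ["Station"],
}


def plan_route(start, goal, graph=ROADS):
    """Breadth-first search for a shortest sequence of places from start to goal."""
    frontier = deque([[start]])  # paths still to be extended
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None  # the goal cannot be reached


# The same agent reaches a different destination when only the goal changes.
print(plan_route("Home", "Airport"))
print(plan_route("Home", "Park"))
```

Nothing in `plan_route` is specific to one destination: the goal is data passed to the agent, not behaviour wired into its rules.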

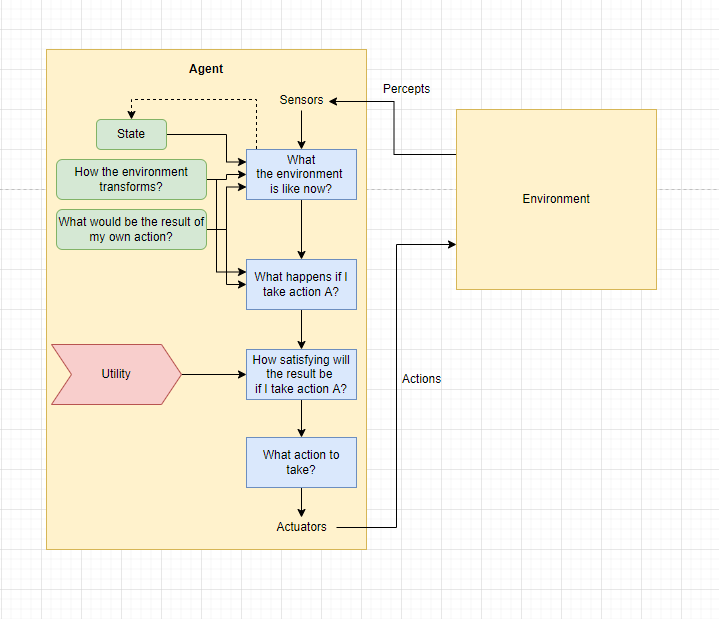

Utility-based agents

Goals alone are not sufficient to generate high-quality behaviour in most environments. For example, many action sequences will get a taxi to its destination, thereby achieving the goal, but some are safer, quicker, more reliable or cheaper than others.

Utility-Based Agents are developed with their end uses as building blocks. They are used when the best option has to be chosen from multiple possible alternatives. These agents choose their actions based on a preference (utility) for each state. Sometimes achieving the desired goal is not enough, and the agent’s happiness should be taken into consideration. Utility describes how happy the agent is. Because of the uncertainty in the world, a utility-based agent chooses the action that maximizes expected utility. An agent’s utility function is essentially an internalization of the performance measure: if the internal utility function and the external performance measure are in agreement, then an agent that chooses actions to maximize its utility will be rational according to the external performance measure.

A utility function maps a state to a number that describes the associated degree of happiness. In two kinds of cases goals are inadequate, but a utility-based agent can still make rational decisions.

First, when there are conflicting goals, only some of which can be achieved, the utility function specifies the appropriate trade-off.

Second, when there are several goals that the agent can aim for, none of which can be achieved with certainty, utility provides a way to weigh the likelihood of success against the importance of the goals. [12]

An agent that holds an explicit utility function can make rational decisions with a general-purpose algorithm that does not depend on the specific utility function being maximized. A Utility-Based agent also has to model and keep track of its environment, tasks that have involved a great deal of research on perception, representation, reasoning and learning.
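Expected-utility maximization can be sketched in a few lines. The routes, probabilities, travel times, costs and the two utility functions `hurried` and `thrifty` below are made-up values for illustration. The selection procedure `choose_action` takes the utility function as a parameter, so it does not depend on which utility is being maximized.

```python
def expected_utility(outcomes, utility):
    """Sum of probability * utility over the possible outcome states of one action."""
    return sum(probability * utility(state) for probability, state in outcomes)


def choose_action(action_outcomes, utility):
    """General-purpose choice: pick the action with the highest expected utility."""
    return max(action_outcomes,
               key=lambda action: expected_utility(action_outcomes[action], utility))


# Hypothetical taxi routes: each action leads to outcomes with given probabilities.
ROUTES = {
    "highway": [(0.8, {"minutes": 20, "cost": 5}),   # usually fast
                (0.2, {"minutes": 60, "cost": 5})],  # sometimes a traffic jam
    "back_roads": [(1.0, {"minutes": 35, "cost": 3})],
}


def hurried(state):
    """Utility of a passenger who only cares about time."""
    return -state["minutes"]


def thrifty(state):
    """Utility of a passenger who only cares about cost."""
    return -state["cost"]


print(choose_action(ROUTES, hurried))
print(choose_action(ROUTES, thrifty))
```

Both routes reach the destination, so a pure goal test cannot tell them apart; the utility function is what expresses the trade-off between time and cost.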

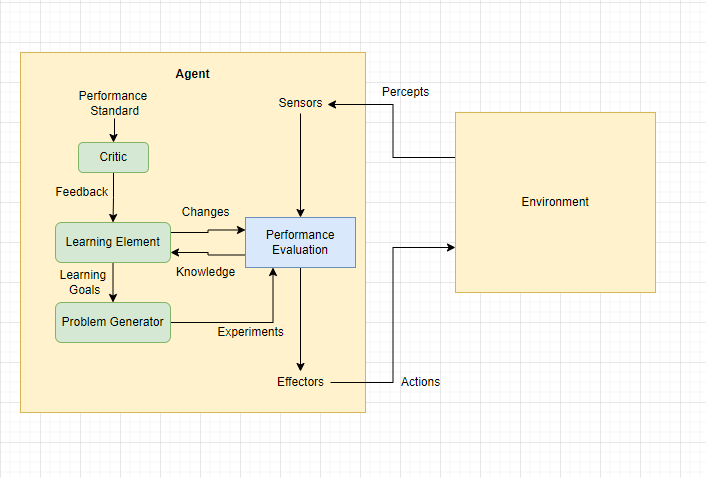

Learning agents

So far, I have not explained how agent programs come into being.

In many fields of AI, building agents that learn is now the preferred method for creating state-of-the-art systems. Learning has another advantage: it allows an agent to operate in initially unknown environments and to become more competent than its initial knowledge alone might allow. A learning agent learns from its past experiences. It starts acting with basic knowledge and is then able to act and adapt automatically through learning.

The Learning Agent has four main conceptual components: the critic, the learning element, the performance element and the problem generator. [13]

The learning element is responsible for making improvements by learning from the environment. It takes feedback from the critic, which describes how well the agent is doing with respect to a fixed performance standard.

The performance element is responsible for selecting external actions. It takes in the percepts and decides on actions. The design of the learning element depends very much on the design of the performance element. In general, the learning element uses feedback from the critic about how well the agent is performing and determines how the performance element should be modified to do better in the future.

The problem generator is responsible for suggesting actions that will lead to new and informative experiences. If the performance element had its way, it would keep performing the actions that are best given what it knows. But if the agent is willing to explore alternatives and try some actions that are probably sub-optimal in the short run, it might discover much better actions in the long run. The problem generator’s task is to recommend these exploratory actions.
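The interaction between the four components can be sketched with a deliberately tiny example. Everything specific here is an assumption made for illustration: the two actions, the fixed scores returned by `critic`, the running-average update, and the simple schedule in which the problem generator is consulted on every fifth step.

```python
class LearningAgent:
    """Toy learning agent with the four conceptual components as methods."""

    def __init__(self, actions, explore_every=5):
        self.estimates = {a: 0.0 for a in actions}  # learned value of each action
        self.counts = {a: 0 for a in actions}       # how often each action was tried
        self.explore_every = explore_every
        self.step = 0

    def performance_element(self):
        """Select the action that looks best given current knowledge."""
        return max(self.estimates, key=self.estimates.get)

    def problem_generator(self):
        """Suggest an exploratory action: the one tried least often."""
        return min(self.counts, key=self.counts.get)

    def choose_action(self):
        self.step += 1
        if self.step % self.explore_every == 0:
            return self.problem_generator()
        return self.performance_element()

    def learning_element(self, action, feedback):
        """Update the estimate for the action as a running average of feedback."""
        self.counts[action] += 1
        n = self.counts[action]
        self.estimates[action] += (feedback - self.estimates[action]) / n


def critic(action):
    """Score an action against a fixed performance standard (made-up values)."""
    scores = {"slow_route": 0.2, "fast_route": 0.8}
    return scores[action]


agent = LearningAgent(["slow_route", "fast_route"])
for _ in range(10):
    action = agent.choose_action()
    agent.learning_element(action, critic(action))

print(agent.performance_element())
```

Without the problem generator, this agent would try `slow_route` first, see a positive score, and never discover that `fast_route` scores higher.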

To prepare this article, I studied the lectures from GlobalTechCouncil. They served as a great source of inspiration and guidance through the process of discovering and understanding AI technologies.

Below, you can also find some valuable materials on this topic.

References

[1] https://www.section.io/engineering-education/intelligent-agents-in-ai/

[2] https://www.javatpoint.com/agents-in-a

[3] https://www.tutorialspoint.com/artificial_intelligence/artificial_intelligence_agents_and_environments.htm

[4] https://gungorbasa.com/intelligent-agents-dc5901daba7d

[5] https://www.cpp.edu/~ftang/courses/CS420

[6] https://study.com/academy/lesson/intelligent-agents-definition-types-examples.html

[7] https://web.cs.hacettepe.edu.tr/~ilyas/Courses/VBM688/lec02_intelligentAgents.pdf

[8] http://web.pdx.edu/~arhodes/ai5.pdf

[9] https://www.geeksforgeeks.org/agents-artificial-intelligence/

[10] https://people.eecs.berkeley.edu/~russell/aima1e/chapter02.pdf

[11] https://medium.datadriveninvestor.com/artificial-intelligence-agents-and-environments-9b93d73791f3

[12] https://www.jstor.org/stable/1914185

[13] https://study.com/academy/lesson/learning-agents-definition-components-examples.html
